Ollama Quick Start: OpenAI API Compatibility

Ollama now has built-in compatibility with the OpenAI Chat Completions API, making it possible to use more tooling and applications with Ollama locally.

Setup

First, download Ollama and pull a model such as Llama 3 or Mistral:

```shell
ollama pull llama3
```

Usage

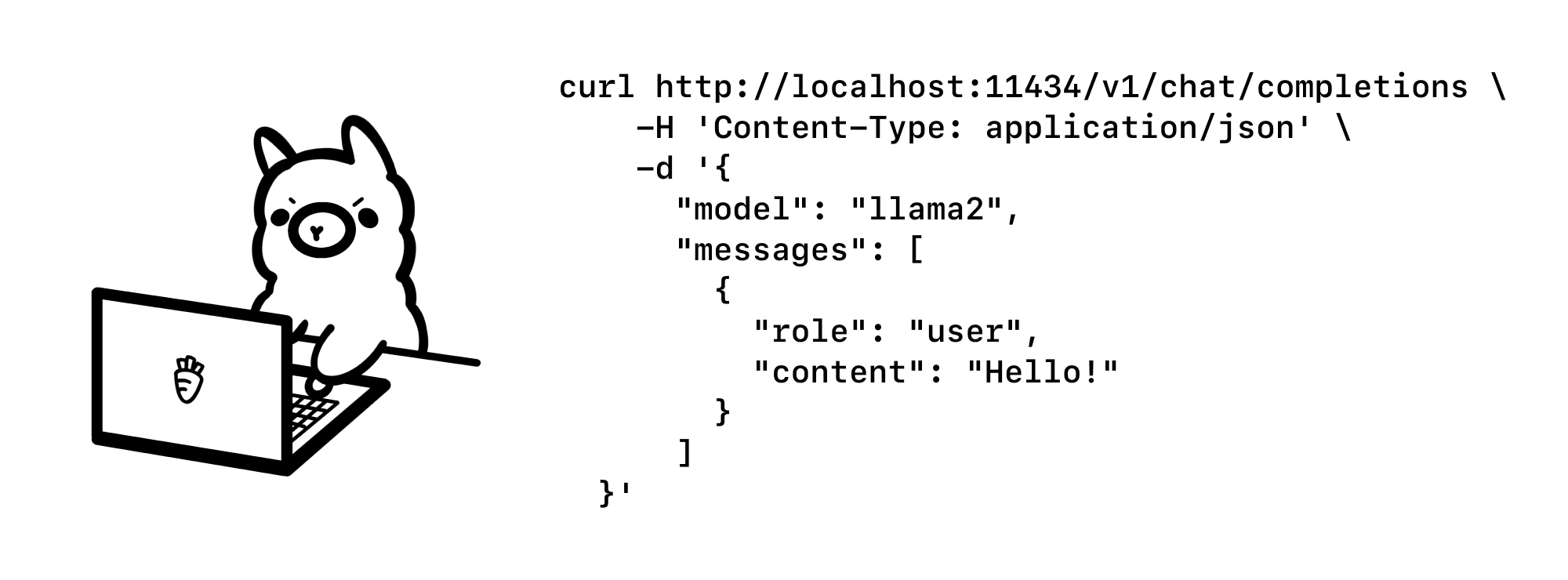
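The endpoint is plain JSON over HTTP, so any HTTP client can call it, not only the OpenAI SDKs. The following is a minimal sketch that uses only Python's standard library. It assumes Ollama is listening on its default port 11434, and the helper names `build_chat_request` and `send_chat_request` are hypothetical. It builds the same request body as the cURL example.

```python
import json
import urllib.request

OLLAMA_BASE_URL = "http://localhost:11434/v1"  # default local Ollama address (assumption)


def build_chat_request(model, messages, base_url=OLLAMA_BASE_URL):
    """Hypothetical helper: build (but do not send) a POST to /chat/completions."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Hypothetical helper: send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


req = build_chat_request(
    "llama3",
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ],
)
print(req.get_method(), req.full_url)
# With Ollama running locally, uncomment to actually send it:
# print(send_chat_request(req))
```

Building the request separately from sending it means the body can be inspected without a running server.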

cURL

To call Ollama's OpenAI-compatible API endpoint, use the same OpenAI request format and change the hostname to http://localhost:11434:

```shell
curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama3",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```

OpenAI Python library

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:11434/v1",
    api_key="ollama",  # required by the client, but ignored by Ollama
)

response = client.chat.completions.create(
    model="llama3",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Who won the world series in 2020?"},
        {"role": "assistant", "content": "The LA Dodgers won in 2020."},
        {"role": "user", "content": "Where was it played?"},
    ],
)

print(response.choices[0].message.content)
```

OpenAI JavaScript library

```javascript
import OpenAI from 'openai'

const openai = new OpenAI({
  baseURL: 'http://localhost:11434/v1',
  apiKey: 'ollama', // required by the client, but ignored by Ollama
})

// Top-level await requires an ES module (a .mjs file, or "type": "module" in package.json)
const completion = await openai.chat.completions.create({
  model: 'llama3',
  messages: [{ role: 'user', content: 'Why is the sky blue?' }],
})

console.log(completion.choices[0].message.content)
```

Examples

Vercel AI SDK

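The Vercel AI SDK is an open-source library for building conversational streaming applications. To get started, use create-next-app to clone the example repository: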

```shell
npx create-next-app --example https://github.com/vercel/ai/tree/main/examples/next-openai example
cd example
```

Then make the following two edits in app/api/chat/route.ts so that the chat example uses Ollama:

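```typescript
// Edit 1: point the OpenAI client at the local Ollama server
const openai = new OpenAI({
  baseURL: 'http://localhost:11434/v1',
  apiKey: 'ollama', // required by the client, but ignored by Ollama
});

// Edit 2: request a local model (here the llama3 model pulled during setup)
const response = await openai.chat.completions.create({
  model: 'llama3',
  stream: true,
  messages,
});
```

Next, run the app: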

```shell
npm run dev
```

Finally, open the example app in your browser at http://localhost:3000.
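The route above sets stream: true. With that setting the reply arrives as a sequence of server-sent events instead of a single JSON body, and the AI SDK decodes these events for you. If you consume the stream yourself, the decoding step can be sketched in Python as shown here. The sketch assumes OpenAI-style framing: each event is a `data: <json>` line carrying a chat.completion.chunk, and the stream ends with `data: [DONE]`. The function name `iter_stream_text` is hypothetical, and the sample stream is made up.

```python
import json


def iter_stream_text(lines):
    """Yield the text deltas from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and non-data fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Synthetic sample stream (made-up content, for illustration only)
sample = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant", "content": "The sky"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": " is blue."}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}',
    "data: [DONE]",
]
print("".join(iter_stream_text(sample)))
```

With the standard library, an open HTTP response from urllib.request.urlopen can be passed in directly, because iterating over it yields byte lines: `for text in iter_stream_text(resp): print(text, end="")`.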

Autogen

Autogen is a popular open-source framework from Microsoft for building multi-agent applications. For this example, we'll use the Code Llama model:

```shell
ollama pull codellama
```

Install Autogen:

```shell
pip install pyautogen
```

Then create a Python script, example.py, that uses Ollama with Autogen:

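```python
from autogen import AssistantAgent, UserProxyAgent

# Point Autogen's OpenAI-compatible client at the local Ollama server
config_list = [
    {
        "model": "codellama",
        "base_url": "http://localhost:11434/v1",
        "api_key": "ollama",  # required by the client, but ignored by Ollama
    }
]

assistant = AssistantAgent("assistant", llm_config={"config_list": config_list})

# The user proxy executes the assistant's code in the local ./coding directory, without Docker
user_proxy = UserProxyAgent(
    "user_proxy",
    code_execution_config={"work_dir": "coding", "use_docker": False},
)

user_proxy.initiate_chat(assistant, message="Plot a chart of NVDA and TESLA stock price change YTD.")
```

Finally, run the example so that the assistant writes the code that plots the chart: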

```shell
python example.py
```

Endpoints

/v1/chat/completions

Supported features

- Chat completions

- Streaming

- JSON mode

- Reproducible outputs

- Vision

- Function calling

- Logprobs
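JSON mode is requested by setting response_format to {"type": "json_object"}. The model's reply is then a JSON document inside the usual message content string, so the client still has to parse it. The prompt should also ask for JSON; Ollama's own API documentation gives the same advice for its JSON format option. Below is a minimal Python sketch. It uses a made-up response in the standard chat.completion shape, and the helper name `parse_json_reply` is hypothetical.

```python
import json

# Request body asking for JSON mode (sent the same way as the cURL example)
request_body = {
    "model": "llama3",
    "response_format": {"type": "json_object"},  # enable JSON mode
    "messages": [
        {"role": "user", "content": "List three primary colors as JSON under the key 'colors'."},
    ],
}


def parse_json_reply(response):
    """Hypothetical helper: return the first choice's content parsed as JSON."""
    choice = response["choices"][0]
    if choice.get("finish_reason") == "length":
        # Output was cut off by max_tokens, so the JSON is likely incomplete
        raise ValueError("reply truncated before the JSON was complete")
    return json.loads(choice["message"]["content"])


# Made-up response in the chat.completion shape, for illustration only
sample_response = {
    "object": "chat.completion",
    "model": "llama3",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": '{"colors": ["red", "yellow", "blue"]}'},
            "finish_reason": "stop",
        }
    ],
}
print(parse_json_reply(sample_response)["colors"])
```

The sketch checks finish_reason first. A reply cut off by max_tokens fails with a clearer error than the JSON decoder would give.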

Supported request fields

- model
- messages
  - Text content
  - Array of content parts
    - Text
- frequency_penalty
- presence_penalty
- response_format
- seed
- stop
- stream
- temperature
- top_p
- max_tokens
- logit_bias
- tools
- tool_choice
- user
- n
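A client can check option names against the field list above before sending a request. The Python sketch below shows one way to do that. `build_chat_body` and `LISTED_FIELDS` are hypothetical names, and the field set is copied from the list above. The check only catches misspelled or unlisted names; whether the server actually honors a given field still depends on the Ollama version. The example also shows both message shapes from the list: plain text content, and an array of text content parts. It sets a fixed seed with temperature 0, aiming for reproducible outputs.

```python
import json

# Optional request fields copied from the "Supported request fields" list
# (model and messages are passed separately)
LISTED_FIELDS = {
    "frequency_penalty", "presence_penalty", "response_format", "seed",
    "stop", "stream", "temperature", "top_p", "max_tokens", "logit_bias",
    "tools", "tool_choice", "user", "n",
}


def build_chat_body(model, messages, **options):
    """Hypothetical helper: assemble a /v1/chat/completions body, rejecting unlisted field names."""
    unknown = set(options) - LISTED_FIELDS
    if unknown:
        raise ValueError(f"unlisted request fields: {sorted(unknown)}")
    return {"model": model, "messages": messages, **options}


messages = [
    # "Text content": content is a plain string
    {"role": "system", "content": "You are a helpful assistant."},
    # "Array of content parts": content is a list of typed text parts
    {"role": "user", "content": [{"type": "text", "text": "Name one planet."}]},
]

body = build_chat_body(
    "llama3",
    messages,
    seed=42,          # fixed seed for reproducible outputs
    temperature=0,    # remove sampling randomness as well
    max_tokens=50,
    stop=["\n\n"],
)
print(json.dumps(body, indent=2))
```

A typo such as max_token=50 raises ValueError here, instead of being sent as an unrecognized field.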

Default model names

For tools that rely on default OpenAI model names such as gpt-3.5-turbo, use ollama cp to copy an existing model to a temporary name:

```shell
ollama cp llama3 gpt-3.5-turbo
```

You can then specify this new model name in the model field:

```shell
curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```

References:

- OpenAI compatibility: https://ollama.com/blog/openai-compatibility

Author: Jeebiz. Created: 2024-07-21 19:06

Last edited by: Jeebiz. Updated: 2025-12-13 10:17
